Mike (Michael) Gee

I recently graduated with a B.S. in Computer Science from the University of Southern California, where I am working with Prof. Yan Liu on time series foundation models, and with Prof. Swabha Swayamdipta and Prof. Yixin Wang (University of Michigan) on LLM evaluations.

My research develops data-centric approaches to ML in which data actively guides learning and inference instead of being passively consumed, and uses these approaches to improve properties of ML systems such as generalizability, explainability, and interpretability.

This takes several forms, including:

- Using text data to create self-supervised labels when fine-tuning language models, eliminating the need for costly human-annotated labels and improving data efficiency

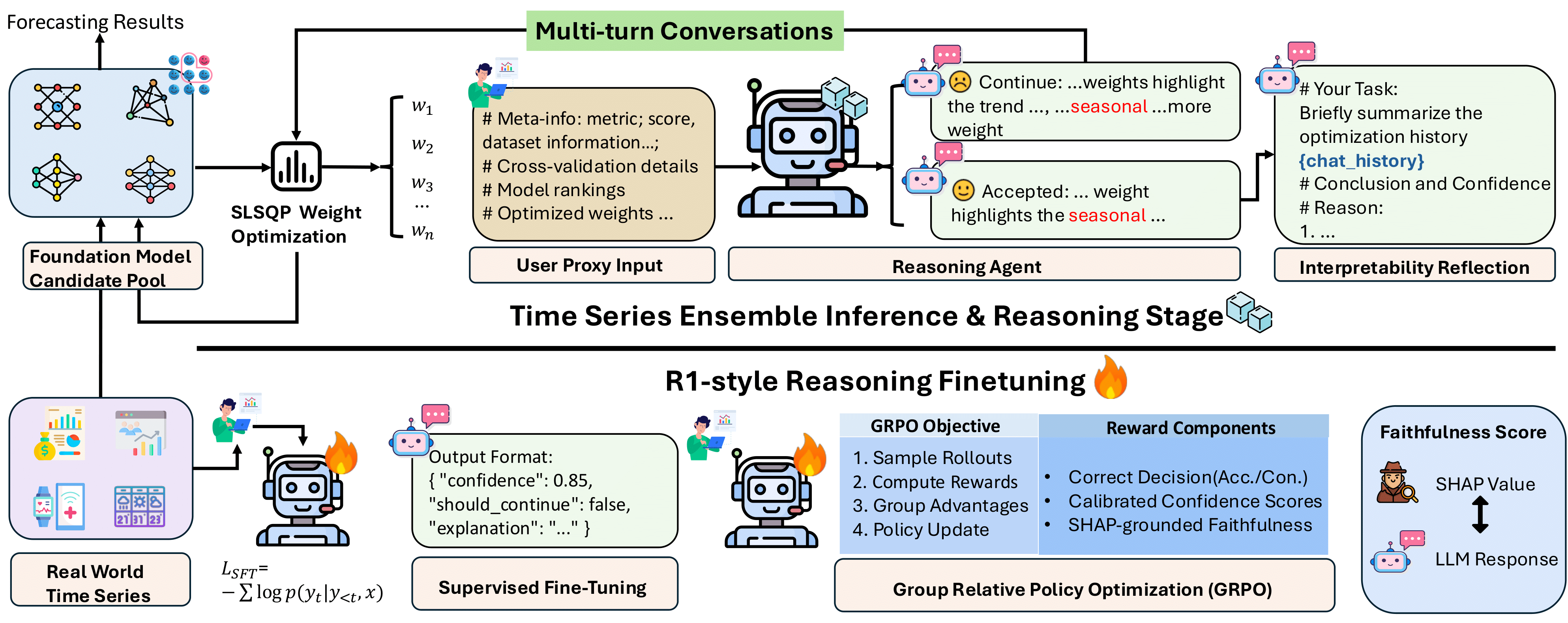

- Using time series’ temporal patterns to guide inference-time decision making in time series forecasting and improve generalizability and explainability

- Using samples in LLM benchmarks to identify fine-grained capabilities and improve interpretability in LLM evaluations and benchmark construction

I am also broadly interested in understanding the relationship between training data and model behavior, investigating how model capabilities emerge during training, and analyzing how training data affects downstream performance.

Here is my CV.

News

- Dec 2025: TSOrchestra, an agentic zero-shot forecasting framework I created with Defu Cao, achieved 1st place on Salesforce’s GIFT-Eval Time Series Forecasting Leaderboard